Evaluating Digital Libraries With Webmetrics

The iSchool, Drexel University

WGBH Interactive

ABSTRACT

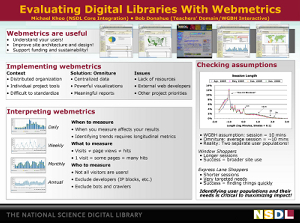

We report preliminary lessons from a year of webmetrics research with two digital libraries. Despite the apparent ‘plug-and-play-and-report’ nature of webmetrics tools, much work was required to extract useful data from the tools used.


Webmetrics measure website use and provide insight into users’ behaviors. They can be used to support website design and justify funding [2]. We report initial findings from a year of webmetrics research with the National Science Digital Library (NSDL: http://nsdl.org/) and WGBH Teachers’ Domain (TD: http://www.teachersdomain.org/). Despite the apparent ‘plug-and-play-and-report’ nature of webmetrics tools, much work was required to obtain useful data.

Case 1: Implementing Webmetrics

NSDL is a federated digital library program composed of many individual projects. Each project runs its own webmetrics tools. The National Science Foundation (NSF) is interested in aggregating webmetrics across these projects. However, it is difficult (if not impossible) to standardize data across all projects and tools. NSDL is therefore implementing a standard webmetrics tool across selected projects. This research has two main aims: to collect standardized metrics, and to provide proof-of-concept of webmetrics aggregation in a distributed networked organizational environment. The latter aim is also of interest for cyberinfrastructure development and evaluation.

The chosen webmetrics tool, Omniture (http://www.omniture.com), is a JavaScript page-tagging tool that is implemented within the HTML of web pages. Omniture is expensive, but highly functional. Implementation requires access to site code. The NSDL implementation has encountered a number of obstacles, often traceable to a lack of resources at the project level. Several projects rely on part-time assistants who may not have the expertise to deal with Omniture. Projects with external web developers can have trouble accessing the project servers in order to implement the code. Project managers may not have the time to oversee webmetrics work alongside their other project work. Finally, new projects often have more immediate priorities than implementing webmetrics, so it is often the more mature projects that implement them. Overall, these initial findings support Lagoze et al.’s observation that successful technology implementation in a distributed organization requires a minimum set of skills and resources at each organizational node, with the absence of any one skill at a node hampering implementation at that node, sometimes severely [1].

Case 2: Reporting Webmetrics

The basic ‘canned’ data obtained from webmetrics tools are useful, but they are just the starting point. TD has been refining Omniture data to produce more sophisticated reports of TD activity. This process includes defining specific unit(s) of analysis; tracking visitor paths; normalizing data to identify trends; accounting for anomalous data; and combining webmetrics with other data sources.

Defining specific unit(s) of analysis is important. The commonly quoted metric of ‘hits’ is unsuitable, as hits include all files downloaded with each page, including embedded images. TD (and NSDL) are focusing instead on ‘visits,’ assuming that each visit represents a user trying to accomplish a task. Similarly, websites often quote ‘page views’ as a metric, but we feel that the visitor path is more useful; a visitor may view 30 pages on a site, but path analysis may reveal repetitive and/or random behavior that suggests confusion, perhaps caused by bad site layout. Normalization is crucial to interpreting trends in the data; for instance, ‘visits per state’ is a significantly different metric from ‘visits per capita per state,’ and a decline in reported visits due to annual fluctuations (e.g. school vacations) is different from an unexpected decline. Finally, combining webmetrics with other data sources can add value to webmetrics. For example, TD webmetrics showed the same user repeatedly logging on to the site over a minute or so; further analysis suggested that this was a teacher providing their class with a single log-in (perhaps to address privacy problems connected with registering children under the age of 13).
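The distinctions drawn above between hits, visits, visitor paths, and normalized counts can be illustrated with a minimal Python sketch. It is not the Omniture reporting logic or the procedure used by TD or NSDL. The 30-minute inactivity timeout, the function names, and the input layouts are all assumptions made for illustration.

```python
from datetime import datetime, timedelta

# Assumed inactivity gap that separates two visits (illustrative, not from the study).
VISIT_TIMEOUT = timedelta(minutes=30)


def count_visits(page_requests):
    """Count visits from (visitor_id, timestamp) page requests.

    A new visit starts when a visitor is first seen, or returns after
    being inactive for longer than VISIT_TIMEOUT.
    """
    visits = 0
    last_seen = {}
    for visitor_id, timestamp in sorted(page_requests, key=lambda r: r[1]):
        previous = last_seen.get(visitor_id)
        if previous is None or timestamp - previous > VISIT_TIMEOUT:
            visits += 1
        last_seen[visitor_id] = timestamp
    return visits


def repeat_ratio(path):
    """Share of page views in one visit that return to an already-seen page.

    A high value is one rough signal of the repetitive behavior described above.
    """
    if not path:
        return 0.0
    return 1 - len(set(path)) / len(path)


def visits_per_capita(visits_by_state, population_by_state, per=100_000):
    """Normalize raw visit counts to visits per `per` residents of each state."""
    return {
        state: visits * per / population_by_state[state]
        for state, visits in visits_by_state.items()
    }
```

With hypothetical inputs, two states that each record 500 visits but have populations of 1,000,000 and 100,000 come out at 50 and 500 visits per 100,000 residents respectively, which shows how per-capita normalization can reverse the impression given by raw counts.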

In summary, NSDL and TD are carrying out ongoing research into developing useful webmetrics for digital library evaluation. Early results are presented in the poster. Further webmetrics reports from NSDL are available at http://eval.comm.nsdl.org/reports.html.

References

[1] Lagoze, C., D. Krafft, T. Cornwell, N. Dushay, D. Eckstrom, & J. Saylor. Metadata Aggregation and “Automated Digital Libraries”: A Retrospective on the NSDL Experience. JCDL 06, pp. 230-239. ACM Press. http://doi.acm.org/10.1145/1141753.1141804

[2] Weischedel, B., & E. Huizingh. Website Optimization With Web Metrics: A Case Study. ICEC 06, pp. 463-469. ACM Press. http://doi.acm.org/10.1145/1151454.1151525