Patterns of Analysis: Supporting Exploratory Analysis and Understanding of Visually Complex Documents

Department of Computer Science

Texas A&M University

College Station, Texas 77843-3112 USA

1-979-845-3839

neal@csdl.tamu.edu

ABSTRACT

In visually complex documents, distinct layers of text and images form a representation in which spatial arrangement and graphical attributes provide components of the document’s meaning in conjunction with the actual words or images of the document. Effective support for analysis and interpretation of these documents requires features that help users to identify and represent relationships between a document and supporting material and to decompose the document to help clarify spatial, temporal, or other characteristics that affect its meaning.

Spatial metaphors for interacting with complex information structures and emerging knowledge offer a promising approach to designing interaction strategies for these documents. The unique characteristics of this domain, however, require that we reexamine the affordances traditionally provided by spatial hypermedia systems. I propose to investigate support for decomposing document objects into component parts, identifying overlapping and interrelated information layers, and expressing relationships within and between documents. To guide this work, I will identify a set of “interaction patterns” from the results of a series of semi-structured ethnographic interviews. I will use these patterns to design, implement, and evaluate extensions to CritSpace, a Web-based application that has been designed to support scholarly interaction with visually complex documents and other resources.

ACM Classification: H.1.2 [User/Machine Systems] Human factors, H.5.2 [User Interfaces]: Interaction styles, H.5.4 [Hypertext/Hypermedia]: User Issues

General Terms: Design, Human Factors.

Keywords: information workspace, analysis, visualization, spatial hypermedia, cultural heritage, emergent structure, digital libraries.

1. INTRODUCTION

Documents express information as a combination of written words, graphical elements, and the arrangement of these content objects in a particular medium. Digital representations of documents (and the applications built around these representations) tend to divide information between primarily textual representations on the one hand (e.g., XML-encoded documents) and primarily graphical representations on the other (e.g., facsimiles). This dichotomy forces a trade-off: textual representations limit the ability to represent visually constructed information, while graphical representations limit the availability of system-level support for interacting with the document’s content.

I adopt the perspective that the text/image distinction forms two ends of a continuum rather than two poles of a dichotomy. That is, all documents convey information both through their logical, textual content and through the way that content is arranged on a page alongside (and structured by) other graphical features of the document. The degree to which relevant information is represented either textually or visually varies based on the characteristics of individual documents and the needs of those using the documents.

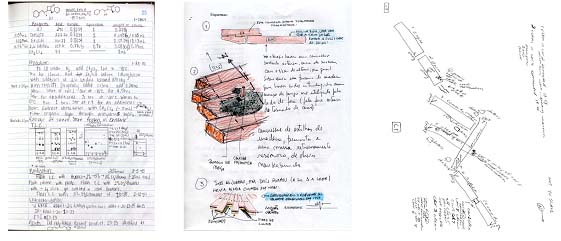

In visually complex documents, distinct layers of text and images form a representation in which spatial arrangement and graphical attributes provide components of the document’s meaning in conjunction with the actual words or images of the document. Constructing visually complex documents is commonplace (capturing laboratory observations in a graphical table is one example) and may involve multiple sessions or authors over time, as when a previously prepared document is annotated or edited. A few examples are shown in Figure 1. Decomposition of visually complex documents into their component parts is often required for understanding or to enable analysis; such decomposition may be spatial or temporal, or may reflect other characteristics. Visually complex documents are important in most domains of scholarship and practice, as almost all researchers and practitioners deal with documents that include spatially significant elements such as text, sketches, annotations, shorthand, and personal or shared visual notations.

Recent results in document image analysis have led to powerful tools for identifying and labeling content in a variety of domains [26]. The challenges for providing electronic access to documents, however, are not found merely in the algorithmic complexity of content recognition. Fundamental research is needed into the human interfaces used to deliver these documents to those who will read and edit them [3].

Figure 1: Examples of visually complex documents: a chemistry lab notebook, a nautical archaeology dive log, and notes taken during an urban search and rescue exercise.


For my dissertation, I propose to design, implement, and evaluate a Web-based interface that will support users as they work to analyze and understand visually complex documents. This interface will rely on the direct manipulation of document images and related data in a visual workspace. Users will be able to describe relationships between documents and parts of documents through the spatial arrangement of objects in a two-and-a-half-dimensional workspace and through manipulation of the visual properties of those objects (for example, background and border color, border thickness and style). In addition to expressing relationships informally through visual properties, users will also be able to define explicit relationships (for example, identifying one document as a previous state of another). This system will support the exploratory work practices needed during the early stages of analyzing visually complex documents, while enabling formal expressions of structure to inform tools that assist with specific tasks.

Working with visually complex documents is a common practice in many different domains. Consequently, a key contribution of my research will be the development of a set of “interaction patterns.” Adopting the approach taken by Christopher Alexander in his pioneering work [2], software engineers [17] and, more recently, interaction designers [58][60] have employed the notion of design patterns (short descriptions of solutions to common problems) with great success. Building on this technique, I will conduct an ethnographic user study in order to identify patterns that describe solutions to high-level analytical tasks common across several disciplines. These patterns will serve as the basis for developing the proposed system.

2. PRIOR WORK

Prior work may be divided into two overlapping areas: research on how to model, encode, and identify document content and research that seeks to understand how people interact with complex information structures and design tools that enhance these interactions.

2.1 Document Content

Text Encoding: Current approaches to text encoding are grounded in the controversial claim that texts "really are" ordered hierarchies of content objects (OHCO) [8]. When the model was applied to the task of developing the Text Encoding Initiative (TEI) guidelines [53], it became apparent that strictly hierarchical structures are unduly restrictive. Revised forms of the model (OHCO-2 and OHCO-3) were quickly introduced, though these also failed to accommodate the non-hierarchical structures found in many documents [44]. The problem of non-hierarchical content has received considerable attention [9], and several strategies have been proposed to deal with this complexity, including milestones, fragmentation, and out-of-line markup [38][55].

Several projects work to move beyond hierarchies as a formal model for text encoding. Two notable efforts are Generalized Ordered-Descendant, Directed Acyclic Graphs (GODDAG) [54] and Layered Markup and Annotation Language (LMNL) [57]. GODDAG provides the formal basis for both the MECS [52] and the TexMecs [22] encoding schemes. LMNL describes a data structure based on Core Range Algebra [40]. Canonical LMNL for XML (CLIX) provides an XML-based representation of LMNL [9][41].
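To make the overlap problem concrete, the following is a minimal sketch, assuming a simplified standoff representation in which each markup range is a named span of character offsets. It is not the LMNL or GODDAG data model, and the range names and sample text are hypothetical; it only detects ranges that overlap without nesting, which is precisely the situation a single XML hierarchy cannot express without milestones or fragmentation.

```python
from dataclasses import dataclass
from itertools import combinations


@dataclass(frozen=True)
class Range:
    """A named markup range over character offsets [start, end)."""
    name: str
    start: int
    end: int


def overlaps_without_nesting(a, b):
    """True if a and b share characters but neither contains the other."""
    share = a.start < b.end and b.start < a.end
    a_in_b = b.start <= a.start and a.end <= b.end
    b_in_a = a.start <= b.start and b.end <= a.end
    return share and not (a_in_b or b_in_a)


def conflicts(ranges):
    """Return every pair of ranges that cannot coexist in one XML tree."""
    return [(a.name, b.name) for a, b in combinations(ranges, 2)
            if overlaps_without_nesting(a, b)]


# Hypothetical two-line text with a phrase that crosses the line break.
text = "the sea at night"
ranges = [
    Range("line1", 0, 8),    # "the sea "
    Range("line2", 8, 16),   # "at night"
    Range("phrase", 4, 10),  # "sea at" spans both lines
]
```

Here `conflicts(ranges)` reports that the phrase clashes with both lines, while the two lines themselves are simply adjacent; range-based models keep all three ranges without forcing one hierarchy to dominate.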

Image Based Models: A more fundamental objection to OHCO specifically, and to text encoding more broadly, has been that these techniques fail to represent the visual properties of documents. Reflecting on the original proposal of the OHCO model, [10] notes that texts are composed of an inseparable mixture of text and graphics. [47] raises the concern that OHCO assumes the salient features of a document are arhetorical, ignoring the communicative role played by document layout and other non-linguistic elements of the text.

The need to provide access to the visual form of a document is a well-recognized source of debate within the digital humanities community. Several notable projects, including the Rossetti Archive [37] and the Blake Archive [12], have developed highly successful digital collections that rely on document images as the central point for accessing their text collections.

Several projects have produced toolkits to help editors establish tight linkages between texts and images. [28][29] describe a system to support text-image coupling for literary sources, focusing on original manuscript documents and other handwritten sources. Their approach establishes linkages between transcriptions and images of a text by augmenting the TEI pointer semantics. The ARCHway project has developed the Edition Production & Presentation Technology toolkit to facilitate manual encoding of document images [14]. They introduce folio R-trees to allow text-to-image mappings in which either the text or the image can be the primary artifact, depending upon user needs [7][25].
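The core of text-image coupling can be sketched as a bidirectional index between transcription tokens and image regions. This is a hypothetical illustration, not the TEI pointer extension of [28][29] or the ARCHway folio R-tree: the class and token names are invented, and the point-to-token lookup is a linear scan where a spatial index such as an R-tree would be used for large collections.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Box:
    """Axis-aligned region on a page image, in pixels."""
    x0: float
    y0: float
    x1: float
    y1: float

    def contains(self, x, y):
        return self.x0 <= x <= self.x1 and self.y0 <= y <= self.y1


class TextImageLinks:
    """Bidirectional links between transcription tokens and image regions."""

    def __init__(self):
        self._links = {}  # token id -> (transcribed word, Box)

    def link(self, token_id, word, box):
        self._links[token_id] = (word, box)

    def region_for(self, token_id):
        """Text to image: where on the facsimile does this token appear?"""
        return self._links[token_id][1]

    def tokens_at(self, x, y):
        """Image to text: which tokens cover this point? (linear scan)"""
        return [tid for tid, (_, box) in self._links.items() if box.contains(x, y)]


# Hypothetical tokens and coordinates.
links = TextImageLinks()
links.link("w1", "Lengua", Box(10, 10, 60, 25))
links.link("w2", "de", Box(65, 10, 80, 25))
```

Because neither side is privileged, a reader can start from the transcription (`region_for`) or from a click on the facsimile (`tokens_at`).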

Document Image Analysis: The library and information sciences community has focused on encoding standards that support long-term document preservation and dissemination, relying on human effort to provide the required transcriptions and metadata. The engineering community, on the other hand, has focused on developing robust methods for automatically recognizing document structure and content.

Early Document Image Analysis (DIA) research dealt primarily with modern printed material such as journal articles [39]. Building on the success of this work, recent efforts have sought to extend these techniques to freeform handwritten materials [26]. For specialized domains such as processing bank checks or reading postal addresses on envelopes, this work has proven highly successful [23][56].

Generalizing these results to the task of processing full-page, handwritten texts has proven to be more challenging. Recent research into identifying basic elements within these documents has focused on improved adaptive thresholding [15][18][43], restoration of historical damage [13], identifying words [32], separation of overlapping words [5], and identifying lines of text [24][30]. Other work has addressed challenges such as grouping text lines and regions [62], aligning transcriptions with images [45], and identifying regions of interest [63]. These results, while currently inadequate for a fully automated system, provide key enabling technologies to support interaction with documents.
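The value of adaptive thresholding can be illustrated with a minimal sketch of local-mean binarization, a simpler relative of the methods cited above rather than an implementation of any of them. Each pixel is compared with the mean of its neighborhood (computed from an integral image) instead of a single global cutoff, so uneven illumination or staining does not turn an entire region into ink. The window `radius` and `offset` are assumed parameters, and the horizontal projection profile at the end is only the most basic cue for locating text lines.

```python
def integral_image(img):
    """ii[y][x] holds the sum of img over rows < y and columns < x."""
    h, w = len(img), len(img[0])
    ii = [[0] * (w + 1) for _ in range(h + 1)]
    for y in range(h):
        row_sum = 0
        for x in range(w):
            row_sum += img[y][x]
            ii[y + 1][x + 1] = ii[y][x + 1] + row_sum
    return ii


def adaptive_threshold(img, radius=1, offset=20):
    """Binarize a grayscale image (rows of 0-255 ints) against its local mean.

    A pixel becomes ink (1) when it is darker than the mean of its
    (2*radius+1)^2 neighborhood minus `offset`; windows are clipped at borders.
    """
    h, w = len(img), len(img[0])
    ii = integral_image(img)
    out = [[0] * w for _ in range(h)]
    for y in range(h):
        y0, y1 = max(0, y - radius), min(h, y + radius + 1)
        for x in range(w):
            x0, x1 = max(0, x - radius), min(w, x + radius + 1)
            total = ii[y1][x1] - ii[y0][x1] - ii[y1][x0] + ii[y0][x0]
            mean = total / ((y1 - y0) * (x1 - x0))
            out[y][x] = 1 if img[y][x] < mean - offset else 0
    return out


def row_profile(binary):
    """Horizontal projection profile: ink pixels per row (peaks suggest lines)."""
    return [sum(row) for row in binary]
```

On a page whose left half is darkened (background 120) and right half is bright (background 220), a global cutoff of 128 would mark the whole left half as ink, whereas the local comparison isolates only the strokes that are darker than their surroundings.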

2.2 Users & Interaction

Spatial Hypertext: Dominant hypertext systems, such as the Web, express document relationships via explicit links. In contrast, spatial hypertext expresses relationships by placing related content near each other on a two-and-a-half-dimensional canvas (two dimensions, with layering to support overlapping objects) [34]. Based upon observations of the use of prior hypertext systems such as Aquanet [33], the Virtual Notebook System [48], and NoteCards [59], Marshall et al. designed VIKI [35], a system for expressing relationships spatially. VIKI included parsers capable of recognizing relationships between objects such as lists, list headings, and stacks. It also supported the creation of hierarchical spaces, called collections, that could contain an arbitrary set of objects. The Visual Knowledge Builder (VKB) [51], a second-generation spatial hypertext system, extends VIKI’s features by incorporating structured metadata attributes for objects, search capability, navigational linking, and the ability to follow the evolution of the information space via a history mechanism. Tinderbox [4] allows its users to “make, analyze and share notes” by incorporating these “incrementally and naturally in an organic, unplanned, spatial hypertext.” While initial spatial hypertext systems did not support multiple roles, such as authors and readers, recent advances in VKB support separate roles [16][31].
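The kind of implicit structure a spatial parser recognizes can be sketched with a single hypothetical rule: objects of the same color whose left edges align and that follow one another with small vertical gaps form a list. VIKI’s actual parser used its own, richer heuristics; the node names, the alignment rule, and the tolerances below are assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Node:
    """A workspace object: position, size, and one visual attribute."""
    name: str
    x: float
    y: float
    w: float
    h: float
    color: str


def find_lists(nodes, align_tol=5.0, gap_tol=15.0):
    """Group nodes into vertical lists: same color, left edges aligned within
    align_tol, each node starting at most gap_tol below the previous one."""
    ordered = sorted(nodes, key=lambda n: (n.y, n.x))  # top to bottom
    used, lists = set(), []
    for start in ordered:
        if start.name in used:
            continue
        run = [start]
        for cand in ordered:
            last = run[-1]
            if cand.name in used or cand in run:
                continue
            if (cand.color == last.color
                    and abs(cand.x - last.x) <= align_tol
                    and 0 <= cand.y - (last.y + last.h) <= gap_tol):
                run.append(cand)
        if len(run) >= 2:  # a single object is not a list
            lists.append([n.name for n in run])
            used.update(n.name for n in run)
    return lists
```

The parse is only a suggestion of structure: the user never declares the list, and moving one node out of alignment silently dissolves it, which is what makes the representation lightweight.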

Incremental Formalization: While computer systems work well with structured information, humans are often unwilling, and sometimes unable, to provide formalized knowledge representations directly via a software interface. The approach of incremental formalization [49] allows users to provide unstructured information to the computer and helps them formalize it gradually, often over an extended period. Systems such as the Hyper-Object Substrate (HOS) [49] and spatial hypertext systems such as VIKI and VKB embody this approach. Incremental formalization helps users structure their knowledge at their convenience, allowing them to delay formalization decisions until they are ready to commit to them. In addition to the spatial metaphor, VKB supports activity links as a mechanism for theorizing and interpreting what particular portions of activity in the document history mean, allowing the expression of formal models for describing these activities over time [21].

Annotations and Interaction: While users are unwilling to formalize their knowledge early on when interacting with a document, they do need features for thinking about document components, representing formative thought and communicating these thoughts to their collaborators and peers. Systems like XLibris [46] promote the use of annotations for expressing informal thought while reading. XLibris allows users to annotate documents displayed on a tablet PC via pen-based interaction. The XLibris project has explored free-form ink annotations in the context of legal research [36] and has explored mechanisms for robust positioning of these annotations in the face of changes to the underlying document [20]. Annotations indicate points of interest to the user in a document and serve as an interface to the corpus for locating documents of potential interest [18]. This novel mechanism of expressing interest in portions of a document by interacting with it has also been investigated in the context of the Classroom 2000 project [42], where interaction points act as linkages between several documents.
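One common way to keep an annotation attached to a changing document is to store the annotated passage together with a little of its surrounding text, then re-locate it after edits. The following is a minimal sketch of that general technique, not the published XLibris algorithm [20]; the `Anchor` fields, the 10-character context window, and the scoring rule (similarity of context, measured with Python’s `difflib.SequenceMatcher`) are assumptions.

```python
from dataclasses import dataclass
from difflib import SequenceMatcher


@dataclass
class Anchor:
    quote: str   # the annotated passage
    prefix: str  # text just before it when the annotation was made
    suffix: str  # text just after it


def make_anchor(doc, start, end, context=10):
    return Anchor(doc[start:end], doc[max(0, start - context):start],
                  doc[end:end + context])


def reanchor(doc, a):
    """Return (start, end) of the quote in an edited document, or None if lost.

    Every exact occurrence of the quote is a candidate; ties are broken by how
    closely the surrounding text resembles the stored prefix and suffix.
    """
    best, best_score = None, -1.0
    i = doc.find(a.quote)
    while i != -1:
        j = i + len(a.quote)
        before = doc[max(0, i - len(a.prefix)):i]
        after = doc[j:j + len(a.suffix)]
        score = (SequenceMatcher(None, before, a.prefix).ratio()
                 + SequenceMatcher(None, after, a.suffix).ratio())
        if score > best_score:
            best, best_score = (i, j), score
        i = doc.find(a.quote, i + 1)
    return best
```

Inserting text before an annotated word shifts its offsets, but the stored context still identifies which of several identical words was meant; if the passage itself is deleted, the function reports the anchor as lost rather than guessing.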

2.3 Limitations

Current solutions suffer from a number of limitations. While encoding standards such as the TEI and hypertextual archives of document images and supporting data have been used with great success to publish many high-quality electronic editions, these tools offer little support for early-stage, exploratory research involving visually complex documents. The formal structures inherent in these approaches require that those who use them already have a well-developed understanding of the documents they wish to represent. For example, speaking to researchers working with cultural heritage materials, Lavagnino observes, “the appropriate scholarly tools for the early exploratory stages of a project may be pen and paper, or chalk and a large blackboard” [27]. The level of formality required by most tools makes creative, exploratory decomposition of documents impractical.

At the other end of the spectrum, spatial hypertext tools support informal knowledge representation, ambiguity, and incremental formalization, but are not easily adapted to working with large, complexly structured documents at various levels of granularity. Most published work with spatial hypertext has represented content nodes as relatively small chunks of information—an image or a few sentences—with little or no internal structure. By contrast, a visually complex document may be an entire lab notebook or a multi-volume book. While spatial hypertext presents a useful starting point to think about developing tools to support exploratory analysis, this metaphor will need to be rethought and extended to adequately represent the unique characteristics of this domain.

3. BACKGROUND

In order to support interactive understanding of complex documents, I have developed the underlying infrastructure for this project in conjunction with other members of the Center for the Study of Digital Libraries. After evaluating the potential for using an existing system, we decided to build a new Web-based application called CritSpace. While existing spatial hypertext systems support the informal interactions and incremental formalization required for this project, most are intended for personal use, are optimized for work with lightweight hypertext nodes (a few words or a small image), and are implemented as stand-alone applications. These systems specialize in the expression of inter-relationships by juxtaposition; these mechanisms are useful but insufficient for expressing the variety of relationships found in visually complex documents.

The realization of our long-term goals requires a system that can be used collaboratively and is widely available for personal as well as shared annotations over high-quality representations of rich documents. To meet these design criteria, CritSpace, shown in Figure 2, is implemented as a Web-based system that can interact seamlessly with digital document collections. It currently implements a small set of spatial hypertext features, such as free positioning of objects whose visual properties (background and text color, font, and border) can be modified. It also includes basic features for working with digital facsimiles: users can arrange facsimile document images in a two-dimensional workspace and resize and crop these images. Users may search and browse the holdings of an associated digital library directly from the interface, adding related images and textual information as desired. In addition to content taken from the digital library, users may add links to other material available on the Web, or add their own comments to the workspace in the form of annotations. CritSpace provides us with a strong foundation for incrementally building features that support document analysis and interpretation. The independence of the tool will enable us to tailor it to meet needs as they are identified and to use it as a platform for testing specific research questions.
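The workspace features just listed suggest a simple object model. The sketch below is hypothetical: CritSpace’s actual data structures are not described here, and every class and field name is an assumption. It shows positioned items with a stacking order (the “half” dimension), editable visual properties, an optional crop rectangle for facsimile images, and JSON serialization of the kind a Web client might consume.

```python
import json
from dataclasses import dataclass, field, asdict
from typing import Optional


@dataclass
class Style:
    background: str = "#ffffff"
    text_color: str = "#000000"
    font: str = "serif"
    border_color: str = "#000000"
    border_width: int = 1


@dataclass
class Crop:
    """Visible sub-rectangle of the source image, in source-image pixels."""
    x: int
    y: int
    w: int
    h: int


@dataclass
class WorkspaceItem:
    item_id: str
    kind: str                     # e.g. "facsimile", "note", "link"
    x: float
    y: float
    width: float
    height: float
    z: int = 0                    # stacking order for overlapping objects
    style: Style = field(default_factory=Style)
    crop: Optional[Crop] = None   # only meaningful for images
    source: Optional[str] = None  # e.g. a digital-library identifier or URL

    def resize(self, scale):
        self.width *= scale
        self.height *= scale


def to_json(items):
    """Serialize a workspace back to front, as a client would draw it."""
    return json.dumps([asdict(i) for i in sorted(items, key=lambda i: i.z)])


# Hypothetical usage: a cropped page image and a yellow note laid over it.
page = WorkspaceItem("p1", "facsimile", 0, 0, 400, 600,
                     crop=Crop(0, 0, 800, 1200), source="hypothetical-id")
note = WorkspaceItem("n1", "note", 420, 10, 150, 80, z=1,
                     style=Style(background="#ffff99"))
page.resize(0.5)
```

Keeping the crop in source-image coordinates, rather than re-encoding the image, means a clipped region can always be traced back to the full facsimile.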

CritSpace is designed to work in conjunction with extensible server-side modules. We have developed two: an image management system and a general-purpose facsimile edition toolkit, AFED (Annotated Facsimile Edition). The image management system facilitates rapid integration of new image processing modules and metadata management tools that support user customization. AFED provides a conceptual model and implementation for the macro-level structure of facsimile editions that facilitates multiple collations of images and comparison between different versions of a document [1]. These components have been developed with a view toward interoperability from the outset. While much work remains, the core framework for the system is in place.
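The idea of multiple collations over one set of facsimile images can be illustrated with a hypothetical sketch. It does not reproduce AFED’s conceptual model [1]; the class, its methods, and the sample leaves are invented. A single pool of page images is shared by several named orderings, and two orderings can be compared for images present in only one of them and for changes in relative order.

```python
class FacsimileEdition:
    """Hypothetical sketch: one pool of page images, many named orderings."""

    def __init__(self):
        self.images = {}      # image id -> descriptive label
        self.collations = {}  # collation name -> ordered list of image ids

    def add_image(self, image_id, label):
        self.images[image_id] = label

    def add_collation(self, name, order):
        unknown = [i for i in order if i not in self.images]
        if unknown:
            raise KeyError(f"unknown images: {unknown}")
        self.collations[name] = list(order)

    def compare(self, a, b):
        """Return images only in a, images only in b, and whether the
        images they share appear in the same relative order."""
        ca, cb = self.collations[a], self.collations[b]
        only_a = [i for i in ca if i not in cb]
        only_b = [i for i in cb if i not in ca]
        same_order = [i for i in ca if i in cb] == [i for i in cb if i in ca]
        return only_a, only_b, same_order


# Hypothetical edition: physical order of leaves versus a reading order.
ed = FacsimileEdition()
for n in range(1, 5):
    ed.add_image(f"p{n}", f"leaf {n}")
ed.add_collation("physical", ["p1", "p2", "p3"])
ed.add_collation("reading", ["p1", "p3", "p2", "p4"])
```

Because collations only reference image identifiers, adding a new ordering never duplicates or alters the images themselves.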

Figure 2: The CritSpace interface showing a manuscript text from the Picasso Project along with reader notes, related artwork, documents, document snippets, and the transcription. Image metadata and search results are displayed in a bar on the right-hand side of the interface.


For most people working with visually complex documents, CritSpace embodies a new user interface paradigm. In order to develop intuition about how users might react to this system initially, and what features need to be clarified or extended, I conducted a pilot user study with a small number of researchers in cultural heritage-related disciplines. This study has helped to refine and focus our attention on three main tasks: annotation, decomposition, and re-contextualization. The observations made during this study have also been used to inform the design of the more formal user studies described in the following sections.

4. PROPOSED WORK

For my dissertation, I propose to design, implement, and evaluate a Web-based application to support the analysis of visually complex documents. To achieve this, I will adopt a human-centered design methodology. The design of system features will be informed by practitioners who create and use these documents. Throughout the process, I will receive feedback from the target user groups via in-situ observation, questionnaires, free-form interviews, and hands-on use of developed features. Researchers and experts from a broad spectrum of work areas have expressed a desire to assist us in this endeavor. These include researchers from the arts and the humanities, archaeology, and the physical and life sciences. Texas Task Force 1 and Search Dog Network members who participate in search and rescue operations also support this project. I will use input from some of these groups to ensure that our solutions meet the disparate needs of various communities and support use in different situations. Other groups will participate in the summative evaluation to assess the applicability of my work to new domains.

Based on observations made during my pilot study, I will structure my research initially around three broad areas: decomposition of documents into their constituent parts, re-contextualization of documents and parts of documents, and annotation of individual objects, groups of objects, and the analytical process. My design and implementation work will be driven by the development of interaction patterns that describe the support needed for document analysis tasks in each of these three areas. These patterns will be grounded in the results of formative, semi-structured interviews. Solutions will be implemented as extensions to the CritSpace application. These innovations will be evaluated in a series of focused case studies in which domain experts use the tools I develop in their own research projects.

4.1 Decomposition

Decomposition into component parts, either implicitly or explicitly, is a prerequisite for interpreting visually complex documents. Our preliminary studies indicate that people use sophisticated strategies (often unconsciously) to identify significant content quickly and use that content to form an assessment of the document as a whole. For example, when shown a low-resolution image of a page chosen at random from a lab notebook, a scientist immediately noticed a diagram and commented that this particular experiment yielded good results.

For complex documents, identifying the relevant content and understanding the relationships between the various elements on the page is non-trivial. Often, content layers such as annotations or background images will overlap other elements. The draft manuscript of a poem by Pablo Picasso, shown in Figure 3, illustrates some of the complexities involved in decomposing documents. In this document, Picasso’s secretary has typed a previous draft manuscript. Picasso then added extensive annotations to the document, noting the date of these revisions, December 6th, 1935, at the top of the page. Later, on December 24th, having run out of space, he pasted the typed page onto a larger sheet of paper and added a second set of annotations in red.

Most current approaches to segmenting document images, both automatic and manual, assume that documents can be decomposed into geometric regions of homogeneous content. In visually complex documents, however, this is often not the case. This manuscript illustrates the multiple, visually overlapping layers of information that are conspicuously present in many documents.

Another source of complexity arises from the fact that individual content elements may belong to multiple layers. For example, in Picasso’s draft, the majority of the text on the page might be easily grouped as either part of the original, typed text, or the first or second layer of annotations. The date at the top of the page, “24 Dicembre XXXV,” however, plays a more ambiguous role. It may be seen as part of the second layer of annotation or it may serve as a sort of provisional title for this draft. Illuminated letters in medieval manuscripts are another example of this, fulfilling dual roles as both text and illustrations. This sort of ambiguity is characteristic of visually complex documents.
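Supporting this ambiguity means that layers cannot simply partition the page. A minimal, hypothetical sketch of a many-to-many model follows: each layer is a set of element identifiers, an element may sit in any number of layers, and elements with more than one role can be listed for the scholar’s attention. The element and layer names echo the Picasso example but are invented labels, not an encoding of the actual manuscript.

```python
from collections import defaultdict


class LayerModel:
    """Hypothetical sketch: page elements may belong to any number of layers."""

    def __init__(self):
        self.layers = defaultdict(set)  # layer name -> element ids

    def assign(self, element_id, *layer_names):
        for name in layer_names:
            self.layers[name].add(element_id)

    def layers_of(self, element_id):
        return sorted(n for n, members in self.layers.items()
                      if element_id in members)

    def multi_layer_elements(self):
        """Elements playing more than one role, e.g. a date that is also a title."""
        counts = defaultdict(int)
        for members in self.layers.values():
            for e in members:
                counts[e] += 1
        return sorted(e for e, c in counts.items() if c > 1)


# Hypothetical labels modeled on the draft in Figure 3.
m = LayerModel()
m.assign("typed-text", "original typescript")
m.assign("note-1", "first annotations")
m.assign("date-24-dec", "second annotations", "provisional title")
```

Recording both memberships, instead of forcing a single region assignment, preserves the interpretive question rather than settling it by data-model fiat.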

In addition to the visually apparent divisions of content, scholars may also be interested in identifying content based on the document’s history. For example, the title attributed to this document is Lengua de Fuego, even though this phrase is not present in the form shown here. “Lengua de fuego” was the opening phrase of an earlier state of the document, but in this form “de fuego” appears as the last words of the fourth line while “lengua” is still at the beginning of the first line.

Figure 3: An author’s manuscript with multiple layers of annotations.


Readers may also propose the presence of information layers that were not created explicitly during the authoring process in order to assist in the task of interpreting documents. For example, when reviewing her field notes to track significant patterns, an anthropologist might wish to identify the various individuals who provided certain facts during a group interview, implicitly assigning responses from different people to different layers. Thus, the separation of information into layers may rely primarily on contextual knowledge and external data sources rather than the visual form of the document.

Consequently, identifying sub-document-level content is not simply a matter of manual or automatic image segmentation. On the contrary, determining how to decompose the content of a document is a scholarly activity that itself requires support.

Based on the findings of my formative evaluation, I will distill users’ goals and methods for identifying content within documents into patterns of decomposition, paying special attention to the commonalities and distinctions across different domains. These patterns, in turn, will provide the basis for designing and implementing tools in CritSpace that help users decompose documents.

A key design question is how to develop tools to assist users in identifying document content. While manual identification may be feasible for many tasks, including coarse-grained analysis of small collections, it quickly becomes prohibitively tedious and time-consuming for larger collections or detailed analysis. Additionally, for tasks such as segmenting annotation layers based on ink color, computational support is required. Fully automated processing, however, is currently technologically infeasible and fails to account for the scholarly decision making required for many decomposition tasks. Consequently, I will work to develop a hybrid solution that incorporates document analysis algorithms into end-user interfaces in order to minimize hand coding and to enable tasks that are not possible through purely manual analysis.
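Segmenting annotation layers by ink color illustrates what such a hybrid might look like. In the minimal sketch below, the user picks a reference color for each layer (for instance, by clicking a sample of black typescript and of red annotation), and the computer assigns every pixel to the nearest reference, leaving paper and ambiguous pixels unlabeled. Plain Euclidean distance in RGB and the `max_dist` cutoff are simplifying assumptions; a practical tool would likely use a perceptual color space or clustering.

```python
import math


def segment_by_ink(pixels, references, max_dist=80.0):
    """Assign each RGB pixel to the nearest user-chosen ink color.

    pixels: rows of (r, g, b) tuples; references: layer name -> (r, g, b).
    Pixels farther than max_dist from every reference (e.g. paper) get None.
    Returns rows of layer names.
    """
    labels = []
    for row in pixels:
        out = []
        for px in row:
            name, d = min(((n, math.dist(px, ref)) for n, ref in references.items()),
                          key=lambda t: t[1])
            out.append(name if d <= max_dist else None)
        labels.append(out)
    return labels


# Hypothetical reference colors chosen by the user.
refs = {"typescript": (30, 30, 30), "red annotations": (180, 30, 30)}
```

The division of labor is deliberate: the scholar decides which layers exist and what they look like, while the algorithm does the tedious per-pixel work and leaves doubtful cases for human review.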

4.2 Re-Contextualization

As users identify and decompose a document’s content, they also need to be able to re-contextualize that content quickly in order to facilitate comparison of related material and as a lightweight means of externalizing implicit knowledge during exploratory stages of research. Clipping a document page or an illustration from a book and placing it in the workspace as a reminder of (and link to) content that a user wants to remember is one example of this type of re-contextualization.

Another form of re-contextualization is the introduction of related material into the workspace. For complex information analysis tasks, developing an understanding of any particular detail requires an analyst to draw on a range of intricately interrelated background knowledge and supporting material. This requirement is evident in scholarly research. For example, in examining field notes recorded from an underwater dive site, a nautical archaeologist needs to reference area maps, historical shipbuilding treatises, related findings in the research literature, 3D models of ships, and photographs.

In my research, I will pay careful attention to the need to re-contextualize content objects identified during document decomposition, allowing users to place identified document fragments into new contexts in order to promote creativity in the early stages of design activities, record immediate impressions, and capture free-form observations. By integrating full-text search, metadata browsing, and other information retrieval tools, CritSpace will let users quickly find and integrate relevant content from related digital libraries.
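Combining full-text search with metadata browsing can be sketched with a small inverted index whose results are filtered by metadata fields. This is a hypothetical illustration rather than the interface of the digital library CritSpace connects to; the class, the tokenizer, and the sample records (loosely modeled on the nautical archaeology example) are assumptions.

```python
import re
from collections import defaultdict


class MiniIndex:
    """Hypothetical sketch: full-text search combined with metadata filtering."""

    def __init__(self):
        self.postings = defaultdict(set)  # term -> doc ids
        self.metadata = {}                # doc id -> dict of fields

    @staticmethod
    def tokens(text):
        return re.findall(r"\w+", text.lower())

    def add(self, doc_id, text, **meta):
        self.metadata[doc_id] = meta
        for t in self.tokens(text):
            self.postings[t].add(doc_id)

    def search(self, query, **filters):
        """Docs containing every query term and matching every metadata filter."""
        terms = self.tokens(query)
        if not terms:
            return []
        hits = set.intersection(*(self.postings.get(t, set()) for t in terms))
        return sorted(d for d in hits
                      if all(self.metadata[d].get(k) == v for k, v in filters.items()))


idx = MiniIndex()
idx.add("log-12", "Hull timbers recorded near the keel", kind="dive log")
idx.add("treatise-3", "On the framing of the keel and hull", kind="treatise")
idx.add("photo-7", "Keel fragment, photograph", kind="photograph")
```

A query such as `idx.search("keel", kind="photograph")` narrows a full-text match by a metadata facet, the combination an analyst needs when pulling, say, only photographs into the workspace.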

A key challenge in designing tools to support formative analysis is the need to allow users to work without the distractions introduced by requiring explicit expression of their knowledge early in the analytical process [50]. Such distractions interrupt the flow of creative work [6]. While computers require formal knowledge representations, human communication and idea formation rely on lightweight, flexible information representations characterized by ambiguity and informality.

One strength of the spatial interface paradigm I have adopted in the design of CritSpace is its ability to enable re-contextualization of information with minimal interruptions to the user’s workflow, allowing users to rapidly form and re-form tentatively posed relationships through the juxtaposition of visual content. By encouraging users to rearrange objects freely in a two-dimensional workspace, CritSpace provides a lightweight interface for organizing large amounts of information. The informality and flexibility of this approach is a key advantage for supporting early-stage analysis, when researchers are still formulating precise definitions of the problems they are trying to solve.

My dissertation research will build on this strength by introducing new mechanisms for lightweight re-contextualization tailored to the needs of people working with visually complex documents. During my formative user study, I will gain a better understanding of how re-contextualization can aid the work practices of people using visually complex documents. This understanding will be encoded formally as specific interaction patterns that will in turn be implemented as extensions to the current CritSpace system. For example, our pilot study indicated that several users needed support for constructing visual glossaries: collections of related objects such as illustrated letters extracted from a corpus of early printed books. These objects would be arranged to facilitate comparison with newly identified content and linked to their original context to allow easy access.
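A visual glossary of this kind can be sketched as clips that remember their provenance, laid out in a grid by label. The sketch is hypothetical: the `Clip` fields, the page identifiers, and the fixed cell size and column count are assumptions, and an interactive tool would let users rearrange the grid freely afterwards.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Clip:
    """A region cut from a page image that remembers where it came from."""
    label: str        # e.g. the letter or motif the clip shows
    source_page: str  # identifier of the original page image (hypothetical)
    region: tuple     # (x, y, w, h) in source-image pixels


def glossary_layout(clips, label, cell=120, columns=4):
    """Place all clips with one label in a grid; each entry keeps a link back."""
    chosen = [c for c in clips if c.label == label]
    return [{"x": (i % columns) * cell,
             "y": (i // columns) * cell,
             "links_to": (c.source_page, c.region)}
            for i, c in enumerate(chosen)]


# Hypothetical corpus: five clipped initials "A" and one "B".
clips = [Clip("A", f"page-{n}", (0, 0, 50, 50)) for n in range(5)]
clips.append(Clip("B", "page-9", (5, 5, 40, 40)))
layout = glossary_layout(clips, "A")
```

Because each grid cell carries `links_to`, comparing two initials side by side never severs them from the pages on which they appear.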

4.3 Annotation

It is impossible to discuss decomposition and re-contextualization of documents without also considering user annotations. Annotations play a vital role in the document understanding process as an aid during reading, notes for future reference, clues to other readers, and a step in the incremental formalization of knowledge [19][36]. The current CritSpace implementation allows users to add simple annotations to the workspace by authoring notes. Users may also provide more structured annotations by defining and editing the metadata associated with a particular item. In order to allow annotations at a more fine-grained level, we are in the process of developing and integrating an image annotation tool. With this tool, users will be able to identify regions of the image and provide descriptive metadata for those regions.

Seamless integration of annotation tools in ways that reflect the goals and work practices of our user community is a key objective of my research. To achieve this, a major focus of my formative study will be to investigate the types of annotations scholars currently use. Based on the results of this study I will identify patterns of annotation that both translate current work practices into the CritSpace environment and embody new solutions not possible when working with physical media.

In addition to traditional annotation techniques provided by image and textual markup tools, the spatial interface used in CritSpace invites annotation mechanisms similar to those commonly found in spatial hypermedia applications. These include annotations based on visual properties such as border style and background color, as well as the ability to visually associate annotations with one or more items (or no items at all). I expect the user study will confirm the need for both of these types of annotations. The presence of documents with complex internal structure, such as books, in the CritSpace environment will also create the need and opportunity for new types of annotations. For example, while browsing through a book, a user may wish to add an annotation that describes the relationship of a page to other items in the workspace. To avoid unnecessary clutter, this annotation should be shown only when the page it refers to is visible.
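The visibility rule for page-level annotations can be expressed as a simple filter: an annotation anchored to a page is displayed only while that page is open, whereas annotations anchored to a whole item remain visible. The sketch assumes a hypothetical `Annotation` type with an optional `page` anchor and a mapping from each book-like item to its currently displayed page; it is not CritSpace's actual model.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Optional

# Hypothetical annotation model: page=None means the note applies to the whole item.


@dataclass(frozen=True)
class Annotation:
    text: str
    item_id: str                  # the workspace item (e.g. a book) the note belongs to
    page: Optional[int] = None    # page anchor, or None for an item-level note


def visible_annotations(annotations: list[Annotation],
                        current_pages: dict[str, int]) -> list[Annotation]:
    """Keep item-level notes, plus page-level notes whose page is currently shown.

    `current_pages` maps each book-like item to the page it is displaying;
    items missing from the mapping show no page-level notes.
    """
    shown = []
    for note in annotations:
        if note.page is None:
            shown.append(note)
        elif current_pages.get(note.item_id) == note.page:
            shown.append(note)
    return shown
```

Because the filter is recomputed whenever a page is turned, page-level notes appear and disappear with their pages while the rest of the workspace stays stable.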

5. WORK PLAN

My research will be conducted in four main phases. During the first phase, I will conduct formative user studies in order to develop an understanding of how people from different domains create, find, and/or use visually complex documents. The second phase will focus on analyzing the data collected from these studies in order to describe interaction patterns that will support common analytical tasks. As these patterns are identified, I will also design lightweight prototype implementations of the patterns. During the third phase, I will implement the proposed design using the CritSpace framework. The fourth phase will consist of a case-study based evaluation of the implemented system. Below, I introduce the collaborators who will participate in the user studies and provide access to example documents before discussing each of these phases in more detail.

Figure 4: An example document from the Ship Reconstruction Laboratory: a page from a dive log showing notes taken underwater.

5.1 Collaborators

A number of research groups and training institutions have expressed interest in collaborating in this research. Members of these groups will provide the initial user populations for our studies and serve as a starting point for identifying other individuals and groups that may also be interested in participating. To date, the following groups have formally agreed to be involved:

- Cardiovascular Systems Dynamics Lab (life sciences)

- Romo Group (organic synthesis of natural products)

- Ship Reconstruction Laboratory (nautical archaeology)

- Picasso Project (art history)

- Texas Engineering Extension Service’s Urban Search and Rescue training school (TEEX USAR)

- Search Dog Network (canine search and rescue)

The diversity of these research areas (physical and life sciences, archaeology, arts & humanities, and emergency responder training) will provide a broad base for understanding how scholars from across disciplines interact with visually complex documents in their domain. Members of each of these groups create and/or use different types of visually complex documents for different purposes in different environments.

My research will not attempt to develop a complete solution for each of these domains. Instead, by investigating how people from each of these groups work with documents, I will distill common patterns of practice. While not all patterns will necessarily be applicable to all domains, each pattern will reflect work practices observed in different settings. Once identified, these patterns will serve as the basis for designing both general-purpose applications and tools tailored to the needs of specific domains. My dissertation will evaluate the effectiveness of these patterns by implementing them in the context of the general-purpose CritSpace environment. This implementation will be used to conduct case study evaluations to help assess the utility and effectiveness of the various implemented patterns.

5.2 Phase One: Formative Study

The first phase of my research will consist of an ethnographic study of how scholars and practitioners from different domains create, find, and/or use visually complex documents. The primary objective of this study will be to identify common needs and practices that can serve as the basis for designing interaction patterns during phase two. This study will consist of a series of semi-structured interviews during which I will ask participants to describe the types of documents they use and how they use them. These interviews will be audio recorded and transcribed. I will photograph documents and work practices that provide insight into the tasks the study participants perform. Each interview will last approximately one to one and a half hours, and I plan to conduct about 20 interviews.

I will select study participants who routinely create or use visually complex documents (both modern handwritten documents and cultural heritage materials) as a regular part of their work. Selected participants will be established researchers or practitioners in their fields. For example, to evaluate the use of laboratory notebooks, I will select study participants who are graduate student researchers (who typically create and use laboratory notebooks) and faculty members (who typically supervise students and review their notebooks) rather than undergraduate science students. While this study will involve people from a variety of backgrounds, I will concentrate on scholars working with cultural heritage materials.

Initially, I will select participants from research groups with whom the Center for the Study of Digital Libraries has an existing relationship. During my interviews with these participants, I will ask for recommendations of other individuals who might be interested in participating in this study. Participants will be drawn primarily from universities within Texas.

5.3 Phase Two: Analysis, Design, and Validation

During the second phase of my research, I will analyze the results of the formative user study in order to design a set of interaction patterns. These patterns will describe solutions to tasks that are common to researchers in multiple domains. The specific format of the pattern descriptions will be developed during this time. This format will include a summary of the analysis task the pattern helps to solve, examples from the formative study that illustrate this task, and a short overview of how this pattern supports the task.

In addition to describing each of these patterns, I will also develop lightweight prototypes to be integrated into the CritSpace environment. Using both the pattern descriptions and the prototypes, I will conduct a second round of interviews with selected members of the original study group. These interviews will serve to validate the design patterns and solicit early stage usability feedback on the prototype implementations. This will help to ensure that the problems the patterns address accurately reflect tasks that the user community needs to accomplish and to prioritize the implementation of these patterns.

5.4 Phase Three: Implementation

During the third phase, I will implement the identified interaction patterns.

5.5 Phase Four: Evaluation

The fourth phase consists of a summative evaluation designed to assess the utility of the implemented patterns. This evaluation will be conducted as a case study involving three to five participants. During the study, each participant will use CritSpace to assist them in research (or other work) of interest to the participant over a two to four month period. I will conduct pre-task and post-task interviews to gather qualitative and quantitative feedback on the users' experience with the system. I will also hold regular (weekly or bi-weekly) meetings with each participant to obtain qualitative feedback about the system while specific interactions are fresh in the participant's mind. These regular meetings will also encourage participants to interact with the system on a regular basis and allow me to recognize potential problems early, so that as many participants as possible complete the study.

Participants will be selected for the study based on the following criteria:

- Willingness to use CritSpace on a project of interest to the participant

- Availability of digital material to be used during the study

- Ability to meet with the investigator regularly during the study

- Suitability of the proposed task to be pursued during the study

During the pre-task interview, participants will be asked to identify a task (or tasks) relevant to their research interests that they would like to work on using CritSpace. Once this task has been identified, I will work with the participant to develop a custom data collection. The participant will also have access to tools to add new content into the system and an interface for reporting bugs and requesting new features. In addition to feedback obtained through interviews, CritSpace will contain a history feature similar to the one found in VKB that will allow both the participant and the investigator to review the history of actions performed in the workspace. A logging mechanism will be available to track specific user interactions.
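A history of workspace actions like the one described here could be kept as an append-only, timestamped event log that both the participant and the investigator can filter. The following sketch is a hypothetical illustration: the `WorkspaceHistory` class, its fields, and the action names are assumptions, not the actual VKB or CritSpace implementation. It records events and supports reviewing them by user and by time window.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Optional

# Hypothetical interaction log; action names such as "move" are illustrative.


@dataclass(frozen=True)
class WorkspaceEvent:
    timestamp: datetime
    user: str
    action: str            # e.g. "move", "annotate", "open_page"
    target: str            # identifier of the affected workspace item
    details: tuple = ()    # extra (key, value) pairs, kept immutable


@dataclass
class WorkspaceHistory:
    events: list[WorkspaceEvent] = field(default_factory=list)

    def record(self, user: str, action: str, target: str,
               when: Optional[datetime] = None, **details) -> WorkspaceEvent:
        """Append an event; the timestamp defaults to the current UTC time."""
        event = WorkspaceEvent(when or datetime.now(timezone.utc), user, action,
                               target, tuple(sorted(details.items())))
        self.events.append(event)
        return event

    def review(self, user: Optional[str] = None,
               start: Optional[datetime] = None,
               end: Optional[datetime] = None) -> list[WorkspaceEvent]:
        """Return events in chronological order, filtered by user and [start, end)."""
        selected = [
            e for e in self.events
            if (user is None or e.user == user)
            and (start is None or e.timestamp >= start)
            and (end is None or e.timestamp < end)
        ]
        return sorted(selected, key=lambda e: e.timestamp)
```

An append-only log of this shape supports both uses mentioned above: the participant can step back through their own work, and the investigator can extract counts or sequences of specific interactions for analysis.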

5.6 Tentative Timeline

Sept ’08 – Dec ’08 Phase One: Formative Study

Jan ’09 – Mar ’09 Phase Two: Analysis, Design & Validation

Apr ’09 – Aug ’09 Phase Three: Implementation

Sept ’09 – Dec ’09 Phase Four: Evaluation

6. References

| [1] | N. Audenaert and R. Furuta, "Annotated Facsimile Editions: Defining Macro-level Structure for Image-Based Electronic Editions," presented at Digital Humanities 2008 Conference, Oulu, Finland, June 25-29, 2008. |

| [2] | C. Alexander, S. Ishikawa, and M. Silverstein, A Pattern Language: Towns, Buildings, Construction, vol. 2 of Center for Environmental Structure Series. New York, NY: Oxford University Press, 1977. |

| [3] | H.S. Baird, "Difficult and Urgent Open Problems in Document Image Analysis for Libraries," in Proceedings of the First International Workshop on Document Image Analysis for Libraries (DIAL'04), Palo Alto, CA, 2004, pp. 25-32. |

| [4] | M. Bernstein, "Collage, Composites, Construction," in Proceedings of the Fourteenth ACM Conference on Hypertext and Hypermedia, New York, NY: ACM, 2003, pp. 122-123. |

| [5] | Y. Chen and G. Leedham, "Independent Component Analysis Segmentation Algorithm," in Eighth International Conference on Document Analysis and Recognition (ICDAR'05), 2005, pp. 680-684. |

| [6] | M. Csikszentmihalyi, Creativity: Flow and the Psychology of Discovery and Invention. New York, NY: HarperCollins, 1996. |

| [7] | A. Dekhtyar, et al., "Support for XML Markup of Image-based Electronic Editions," International Journal on Digital Libraries, vol. 6, no. 1, pp. 55-69, 2006. |

| [8] | S. DeRose, D.G. Durand, E. Mylonas, and A.H. Renear, "What is Text, Really?," Journal of Computing in Higher Education, vol. 1, no. 2, pp. 3-26, 1990. |

| [9] | S. DeRose, "Markup Overlap: A Review and a Horse," in Proceedings of Extreme Markup Languages, 2004. |

| [10] | R. Dicks, "Third Commentary on 'What is Text really?'," ACM SIGDOC Asterisk Journal of Computer Documentation, vol. 21, no. 3, pp. 36-39, 1997. |

| [11] | D.G. Durand, Palimpsest: Change-Oriented Concurrency Control for the Support of Collaborative Applications. Ph.D. Dissertation, Boston University, 1999. |

| [12] | M. Eaves, R.N. Essick, and J. Viscomi, Eds., The William Blake Archive. [Online]. Available: http://www.blakearchive.org/ [Accessed Nov. 24, 2007]. |

| [13] | V. Eglin, F. LeBourgeois, S. Bres, H. Emptoz, Y. Leydier, I. Moalla, and F. Drira, "Computer Assistance for Digital Libraries: Contributions to Middle-ages and Authors' Manuscripts Exploitation and Enrichment," in Proceedings of the Second International Conference on Document Image Analysis for Libraries (DIAL'06), Washington, DC: IEEE Computer Society, 2006, pp. 265-280. |

| [14] | K. Kiernan, "Edition Production & Presentation Technology (EPPT)." [Online]. Available: http://beowulf.engl.uky.edu/eppt-trial/EPPT-TrialProjects.htm [Accessed Dec. 2006]. |

| [15] | M. Feng and Y. Tan, "Adaptive Binarization Method for Document Image Analysis," in Proceedings of the 2004 IEEE International Conference on Multimedia and Expo, 2004, pp. 339-342. |

| [16] | L. Francisco-Revilla and F. Shipman, "WARP: A Web-based Dynamic Spatial Hypertext," in Proceedings of the Fifteenth ACM Conference on Hypertext and Hypermedia, New York, NY: ACM, 2004, pp. 235-236. |

| [17] | E. Gamma, R. Helm, R. Johnson, and J. Vlissides, Design Patterns: Elements of Reusable Object-Oriented Software. Reading, MA: Addison-Wesley, 1995. |

| [18] | B. Gatos, I. Pratikakis, and S.J. Perantonis, "An Adaptive Binarization Technique for Low Quality Historical Documents," in IAPR Int. Workshop on Document Analysis Systems, 2004, pp. 102-113. |

| [19] | G. Golovchinsky, M.N. Price, and B.N. Schilit, "From Reading to Retrieval: Freeform ink Annotations as Queries," in Proceedings of 22nd Annual International ACM SIGIR Conference on Research and Development in Information Retrieval, New York, NY: ACM, 1999, pp. 19-25. |

| [20] | G. Golovchinsky and L. Denoue, "Moving Markup: Repositioning Freeform Annotations," in Proceedings of the 15th Annual ACM Symposium on User Interface Software and Technology, New York, NY: ACM, 2002, pp. 21-86. |

| [21] | H. Hsieh and F. Shipman, "Activity Links: Supporting Communication and Reflection about Action," in Proceedings of the Sixteenth ACM Conference on Hypertext and Hypermedia, New York, NY: ACM, 2005, pp. 161-170. |

| [22] | C. Huitfeldt and C.M. Sperberg-McQueen, "TexMECS: An Experimental Markup Meta-language for Complex Documents," February 2001. [Online]. Available: http://xml.coverpages.org/MLCD-texmecs20010510.html [Accessed December 2006] |

| [23] | S. Impedovo, P.S.P. Wang, and H. Bunke, Eds., Automatic Bankcheck Processing. Series in Machine Perception and Artificial Intelligence, vol. 28, World Scientific, 1997. |

| [24] | D.J. Kennard and W.A. Barrett, "Separating Lines of Text in Free-Form Handwritten Historical Documents," in Proceedings of the Second International Conference on Document Image Analysis for Libraries (DIAL'06), pp. 12-23, 2006. |

| [25] | K. Kiernan, J.W. Jaromczyk, A. Dekhtyar, D.C. Porter, K. Hawley, S. Bodapati, and I.E. Iacob, "The ARCHway Project: Architecture for Research in Computing for Humanities through Research, Teaching, and Learning," Literary and Linguistic Computing, vol. 20, pp. 69-88, 2005. |

| [26] | A.L. Koerich, R. Sabourin, and C.Y. Suen, "Large Vocabulary Offline Handwriting Recognition: A Survey," Pattern Analysis and Applications, vol. 6, no. 2, pp. 69-121, Jun. 2003. |

| [27] | J. Lavagnino, "When not to use the TEI." [Online.] Available: http://www.tei-c.org/Activities/ETE/Preview/lavagnino.xml [Accessed Nov. 10, 2006] |

| [28] | E. Lecolinet, L. Likforman-Sulem, L. Robert, F. Role, and J-L. Lebrave, "An Integrated Reading and Editing Environment for Scholarly Research on Literary Works and Their Handwritten Sources," in Proceedings of the Third ACM Conference on Digital Libraries, New York, NY: ACM, pp. 144-151, 1998. |

| [29] | E. Lecolinet, L. Robert, and F. Role, "Text-image Coupling for Editing Literary Sources," Computers and the Humanities, vol. 36, no. 1, pp. 49-73, Feb. 2002. |

| [30] | A. Lemaitre and J. Camillerapp, "Text Line Extraction in Handwritten Document with Kalman Filter Applied on Low Resolution Image," in Proceedings of the Second International Conference on Document Image Analysis for Libraries (DIAL'06), Washington, DC: IEEE Computer Society, 2006, pp. 38-45. |

| [31] | J.J. Leggett and F.M. Shipman, "Directions for Hypertext Research: Exploring the Design Space for Interactive Scholarly Communication," in Proceedings of the Fifteenth ACM Conference on Hypertext and Hypermedia, New York, NY: ACM, 2004, pp. 2-11. |

| [32] | R. Manmatha and J. Rothfeder, "A Scale Space Approach for Automatically Segmenting Words from Historical Handwritten Documents," IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 27, no. 8, pp. 1212-1225, Aug. 2005. |

| [33] | C.C. Marshall, F.G. Halasz, R.A. Rogers, and W.C. Janssen, "Aquanet: A Hypertext Tool to Hold Your Knowledge in Place," in Proceedings of the third annual ACM conference on Hypertext, New York, NY: ACM, 1991, pp. 261-275. |

| [34] | C.C. Marshall and F.M. Shipman, "Searching for the Missing Link: Discovering Implicit Structure in Spatial Hypertext," in Proceedings of the fifth ACM conference on Hypertext, New York, NY: ACM, 1993, pp. 217-230. |

| [35] | C.C. Marshall, F.M. Shipman, and J.H. Coombs, "VIKI: Spatial Hypertext Supporting Emergent Structure," in Proceedings of the 1994 ACM European conference on Hypermedia technology, New York, NY: ACM, 1994, pp. 13-23. |

| [36] | C.C. Marshall, M.N. Price, G. Golovchinsky, and B.N. Schilit, "Designing e-Books for Legal Research," in Proceedings of the 1st ACM/IEEE-CS joint conference on Digital Libraries, New York, NY: ACM, 2001, pp. 41-48. |

| [37] | J.J. McGann, Ed., "The Complete Writings and Pictures of Dante Gabriel Rossetti," [Hypermedia archive]. Available: http://www.rossettiarchive.org/ [Accessed Nov. 2007]. |

| [38] | D. McKelvie, C. Brew, and H.S. Thompson, "Using SGML as a Basis for Data-Intensive Natural Language Processing," Language Resources and Evaluation, vol. 31, no. 5, pp. 367-388, Sep. 1998. |

| [39] | G. Nagy, "Twenty Years of Document Image Analysis in PAMI," IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 22, no. 1, pp. 28-62, Jan. 2000. |

| [40] | G. Nicol, "Core Range Algebra: Toward a Formal Model of Markup," in Proceedings of Extreme Markup Languages, 2002. |

| [41] | W. Piez, "Half-steps toward LMNL." [Online.] Available: http://www.piez.org/wendell/papers/LMNL-halfsteps.pdf [Accessed Dec. 2006]. |

| [42] | M.G. Pimentel, G.D. Abowd, and Y. Ishiguro, "Linking by Interacting: A Paradigm for Authoring Hypertext," in Proceedings of Eleventh ACM Conference on Hypertext and Hypermedia, New York, NY: ACM, 2000, pp. 39-48. |

| [43] | N.B. Rais, M.S. Hanif, and I.A. Taj, "Adaptive Thresholding Technique for Document Image Analysis," in Proceedings of the 8th International Multitopic Conference (INMIC 2004), 2004, pp. 61-66. |

| [44] | A. Renear, E. Mylonas, and D. Durand, "Refining Our Notion of What Text Really Is: The Problem of Overlapping Hierarchies." [Online] Available: http://www.stg.brown.edu/resources/stg/monographs/ohco.html 1993 [Accessed Dec. 2006]. |

| [45] | J. Rothfeder, R. Manmatha, and T.M. Rath, "Aligning Transcripts to Automatically Segmented Handwritten Manuscripts," Lecture Notes in Computer Science, vol. 3872, pp. 84-95, Jan. 2006. |

| [46] | B.N. Schilit, G. Golovchinsky, and M.N. Price, "Beyond Paper: Supporting Active Reading with Free-form Digital Ink Annotations," in Proceedings of the SIGCHI conference on Human factors in computing systems, New York, NY: ACM, 1998, pp. 249-256. |

| [47] | S.A. Selber, "The OHCO Model of Text: Merits and Concerns," ACM SIGDOC Asterisk Journal of Computer Documentation, vol. 21, no. 3, pp. 26-31, Aug. 1997. |

| [48] | F.M. Shipman, R.J. Chaney, and G.A. Gorry, "Distributed Hypertext for Collaborative Research: The Virtual Notebook System," in Proceedings of the second annual ACM conference on Hypertext, New York, NY: ACM, 1989, pp. 129-135. |

| [49] | F.M. Shipman and R.J. McCall, "Incremental Formalization with the Hyper-Object Substrate," ACM Transactions on Information Systems, vol. 17, no. 2, pp. 199-227, Apr. 1999. |

| [50] | F.M. Shipman and C.C. Marshall, "Formality Considered Harmful: Experiences, Emerging Themes, and Directions on the Use of Formal Representations in Interactive Systems," Computer Supported Cooperative Work (CSCW), vol. 8, no. 4, pp. 333-352, Dec. 1999. |

| [51] | F.M. Shipman, H. Hsieh, P. Maloor, and J.M. Moore, "The Visual Knowledge Builder: A Second Generation Spatial Hypertext," in Proceedings of the 12th ACM conference on Hypertext and Hypermedia, New York, NY: ACM, 2001, pp. 113-122. |

| [52] | C.M. Sperberg-McQueen and C. Huitfeldt, "Concurrent Document Hierarchies in MECS and SGML," Literary & Linguistic Computing, vol. 14, no. 1, pp. 29-42, 1999. |

| [53] | C.M. Sperberg-McQueen and L. Burnard, Guidelines for Electronic Text Encoding and Interchange: Volumes 1 and 2: P4, University Press of Virginia, 2003. |

| [54] | C.M. Sperberg-McQueen and C. Huitfeldt, "GODDAG: A Data Structure for Overlapping Hierarchies," Digital Documents: Systems and Principles, Lecture Notes in Computer Science, vol. 2023, pp. 606-630, 2004. |

| [55] | C.M. Sperberg-McQueen, Ed., "TEI Guidelines for Electronic Text Encoding and Interchange." [Online.] Available: http://xml.coverpages.org/teichap31.html [Accessed Dec. 2006]. |

| [56] | S.N. Srihari and E.J. Kuebert, "Integration of Handwritten Address Interpretation Technology into the United States Postal Service Remote Computer Reader System," in Proceedings of the 4th International Conference on Document Analysis and Recognition, pp. 892-896, 1997. |

| [57] | J. Tennison and W. Piez, "The Layered Markup and Annotation Language (LMNL)," presented at Extreme Markup Languages, Montreal, Canada, 2002. |

| [58] | J. Tidwell, Designing Interfaces: Patterns for Effective Interaction Design, O’Reilly, 2005. |

| [59] | R.H. Trigg, "Guided Tours and Tabletops: Tools for Communicating in a Hypertext Environment," ACM Transactions on Information Systems (TOIS), vol. 6, no. 4, pp. 398-414, Oct. 1988. |

| [60] | M.V. Welie, "Pattern Library." [Online.] Available: http://www.welie.com/patterns/ [Accessed Aug. 29, 2008]. |

| [61] | Y. Yamamoto, K. Nakakoji, and A. Aoki, "Spatial Hypertext for Linear-information Authoring: Interaction Design and System Development Based on the ART Design Principle," in Proceedings of the Thirteenth ACM Conference on Hypertext and Hypermedia, New York, NY: ACM, 2002, pp. 35-44. |

| [62] | M. Ye, P. Viola, S. Raghupathy, H. Sutanto, and C. Li, "Learning to Group Text Lines and Regions in Freeform Handwritten Notes," in Proceedings of the Ninth International Conference on Document Analysis and Recognition (ICDAR 2007), vol. 1, IEEE Computer Society, Washington, DC, pp. 28-32, 2007. |

| [63] | X.W. Yin, A.C. Downton, M. Fleury, and J. He, "A Region-of-interest Method for Textually-rich Document Image Coding," IEEE Signal Processing Letters, vol. 11, no. 11, pp. 912-917, Nov. 2004. |